The expanded lineup of AMD’s 4th generation “Genoa” Epyc server chips – built atop the “Zen 4” core, and some with the chip maker’s L3-boosting 3D V-Cache – unveiled at a high-profile event in San Francisco this week is quickly making its way into the cloud.

Microsoft and Amazon Web Services both unveiled new additions to their clouds, and Oracle laid out plans to do the same with new E5 instances in its Oracle Cloud Infrastructure.

AMD used the Data Center & AI Technology Premiere event – a cheeky name that riffs on and tweaks at the Data Center & AI Group at Intel – to highlight its aggressive datacenter push, a market where the company continues to slowly chip away at rival Intel. According to Mercury Research, Intel’s share of the server market was 82.4 percent in Q4 2022, a year-over-year drop from 89.3 percent. Meanwhile, AMD’s share jumped from 10.7 percent in the last quarter of 2021 to 17.6 percent a year later. We await numbers for the first and second quarters of 2023.
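The year-over-year shifts in those Mercury Research figures are simple to work out. This short Python sketch takes the four percentages quoted above and reports each vendor's change in percentage points and in relative terms; the name `share_change` is purely illustrative.

```python
# Server market share in percent, as quoted from Mercury Research in the text.
SHARES = {
    "Intel": {"Q4 2021": 89.3, "Q4 2022": 82.4},
    "AMD": {"Q4 2021": 10.7, "Q4 2022": 17.6},
}


def share_change(vendor: str) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent), Q4 2021 to Q4 2022."""
    before = SHARES[vendor]["Q4 2021"]
    after = SHARES[vendor]["Q4 2022"]
    points = round(after - before, 1)
    relative = round((after - before) / before * 100.0, 1)
    return points, relative


for vendor in SHARES:
    points, relative = share_change(vendor)
    print(f"{vendor}: {points:+.1f} points ({relative:+.1f}% relative)")
```

In these figures the two vendors' shares sum to 100 percent in both quarters, so Intel's 6.9-point loss maps one-for-one onto AMD's 6.9-point gain, which works out to roughly 64.5 percent relative growth for AMD.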

Hyperscalers are arming their cloud environments with a growing variety of chips to run their infrastructures, and thanks to AMD’s sharp execution over the past several years, its Epyc processors are a big part of that mosaic.

As part of AMD’s Epyc expansion, Microsoft is launching the newest virtual machines in its Azure HPC stable, equipping them with server processors featuring AMD’s 3D V-Cache technology and pairing them with high-speed InfiniBand connectivity from Nvidia.

The software and cloud giant in November 2022 announced the public preview of the new HX-series and HBv4-series VMs, aimed at such workloads as computational fluid dynamics (CFD), rendering, AI inference, molecular dynamics, and weather simulation. At the time, the VMs were powered by AMD’s 4th-generation Epyc “Genoa” processors.

For general availability this week, the VMs were upgraded to 4th Gen Epyc processors with AMD’s 3D V-Cache technology (codenamed “Genoa-X”). 3D V-Cache is an advanced packaging technique that lets AMD stack another 64 MB of L3 cache on each compute die, bringing each die to 96 MB and a fully loaded twelve-die Genoa-X socket to 1,152 MB of L3 cache.
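The cache arithmetic multiplies out per die, per socket, and per VM. The sketch below assumes 32 MB of native L3 per compute die (implied by adding 64 MB to reach 96 MB), twelve compute dies in a fully populated Genoa-X socket, and a dual-socket host exposed as one VM; the constant names are illustrative. Under those assumptions the per-VM total lands on the 2.3 GB figure Microsoft quotes for these VMs.

```python
# Assumption: 32 MB of native L3 per compute die, implied by the text
# (64 MB of stacked 3D V-Cache brings each die to 96 MB).
NATIVE_L3_MB = 32
STACKED_L3_MB = 64
DIES_PER_SOCKET = 12  # assumption: a fully populated Genoa-X package
SOCKETS_PER_VM = 2    # assumption: a dual-socket host exposed as one VM

per_die = NATIVE_L3_MB + STACKED_L3_MB
per_socket = per_die * DIES_PER_SOCKET
per_vm = per_socket * SOCKETS_PER_VM

print(per_die, per_socket, per_vm)   # 96 1152 2304
print(f"{per_vm / 1000:.1f} GB")     # 2.3 GB, reading 2,304 MB in decimal units
```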

The pitch is that with more of a workload’s data held in L3, throughput for bandwidth-intensive workloads rises significantly, giving operations such as integer math faster access to the data they need.

Jyothi Venkatesh, senior product manager of Azure HPC, and Fanny Ou, senior technical program manager, wrote that the 2.3 GB of L3 cache per VM in the new offerings delivers up to 5.7 TB/sec of bandwidth, amplifying the up to 780 GB/sec available from main memory. The result: a blended average of 1.2 TB/sec of effective memory bandwidth.
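One way to see how a fast cache lifts effective bandwidth is a simple blending model. This is a hypothetical illustration, not Microsoft's methodology: it assumes some fraction of memory traffic is served from L3 at the 5.7 TB/sec peak and the rest from DRAM at 780 GB/sec, and treats effective bandwidth as total bytes divided by total time (a weighted harmonic mean). Inverting the model shows what cache-served fraction would produce the 1.2 TB/sec average. The function names are invented for this sketch.

```python
# Hypothetical blending model (not Microsoft's methodology).
CACHE_TBPS = 5.7   # peak L3 bandwidth per VM, from the text
MEM_TBPS = 0.78    # peak main-memory bandwidth per VM, from the text


def effective_bandwidth(hit_fraction: float) -> float:
    """Effective TB/sec when hit_fraction of bytes come from L3 and the rest from DRAM."""
    if not 0.0 <= hit_fraction <= 1.0:
        raise ValueError("hit_fraction must be between 0 and 1")
    # Time to move one TB: the cache-served share at cache speed plus the rest at DRAM speed.
    time_per_tb = hit_fraction / CACHE_TBPS + (1.0 - hit_fraction) / MEM_TBPS
    return 1.0 / time_per_tb


def implied_hit_fraction(effective_tbps: float) -> float:
    """Invert the model: the cache-served fraction that yields effective_tbps."""
    return (1.0 / MEM_TBPS - 1.0 / effective_tbps) / (1.0 / MEM_TBPS - 1.0 / CACHE_TBPS)


h = implied_hit_fraction(1.2)
print(f"implied cache-served fraction: {h:.2f}")
print(f"check: {effective_bandwidth(h):.2f} TB/sec")
```

Under this simplified model, roughly 40 percent of traffic would have to come from L3 to reach the 1.2 TB/sec average. Real workloads also depend on latency, access patterns, and how well the working set fits in cache, none of which the model captures.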

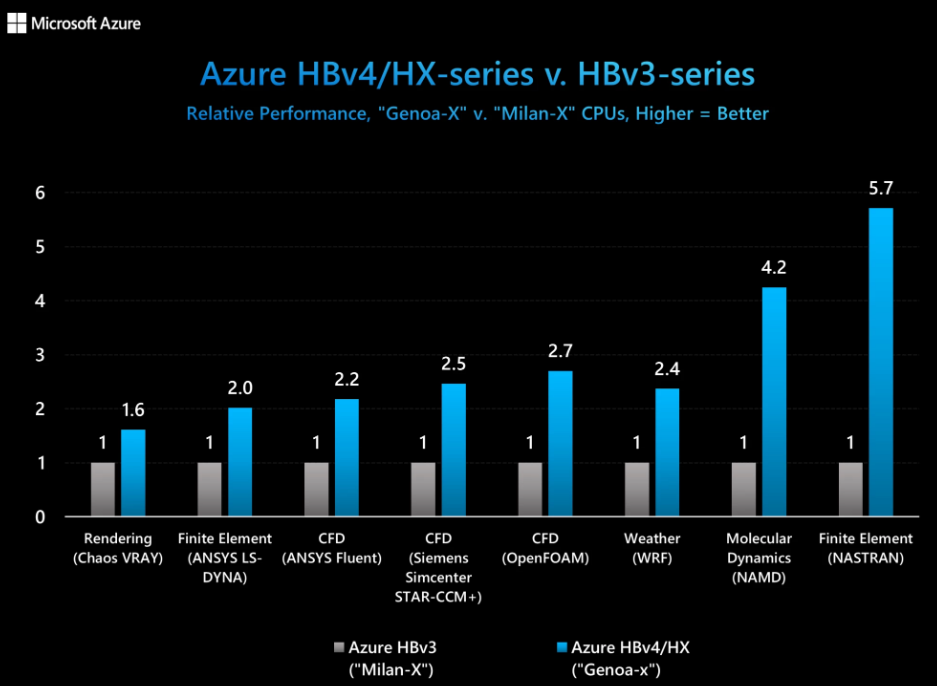

According to Microsoft’s internal testing, for popular memory bandwidth-bound workloads like OpenFOAM – open-source CFD software – that means up to 1.49 times higher performance than VMs with standard 4th Gen Epycs. The HBv4 and HX series also show significant performance jumps over the previous HBv3 series powered by Milan-X processors, as seen below.
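A throughput ratio translates into wall-clock time as runtime divided by speedup. The sketch below assumes a fixed-size job whose runtime scales perfectly with the 1.49X figure, which real CFD runs will not always do; the 10-hour baseline and the helper names are hypothetical.

```python
def runtime_after_speedup(baseline_hours: float, speedup: float) -> float:
    """Wall-clock time for the same fixed-size job when throughput rises by `speedup`x."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


def time_saved_percent(speedup: float) -> float:
    """Share of wall-clock time eliminated by a given speedup."""
    return (1.0 - 1.0 / speedup) * 100.0


# Hypothetical 10-hour OpenFOAM job on a standard Genoa VM.
print(f"{runtime_after_speedup(10.0, 1.49):.1f} hours")  # 6.7 hours
print(f"{time_saved_percent(1.49):.0f}% less time")      # 33% less time
```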

Speaking with AMD chief executive officer Lisa Su at the company’s event, Nidhi Chappell, general manager of Azure HPC, AI, SAP, and confidential computing at Microsoft, laid out other gains the new VMs will offer, including twice the compute density and 4.5X faster HPC workloads on the HBv4 VMs, and a 6X performance jump for data-intensive workloads on the HX virtual machines.

“For a lot of these customers, what this means is they can now fit a lot of their existing workflows either on the same number of cores or fewer number of cores and overall have a much better total cost of ownership because they save a lot on the software licenses,” Chappell said.
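The licensing argument is straightforward arithmetic when software is licensed per core. This sketch uses entirely hypothetical inputs (1,024 licensed cores, a 1.5X per-core throughput gain, and $1,000 per core per year) purely to show how matching the same throughput on fewer cores cuts the license bill; none of these numbers come from Microsoft or AMD.

```python
import math

# Hypothetical inputs for illustration only; not figures from Microsoft or AMD.
BASELINE_CORES = 1024
PER_CORE_SPEEDUP = 1.5
LICENSE_PER_CORE_PER_YEAR = 1_000.0


def cores_needed(baseline_cores: int, per_core_speedup: float) -> int:
    """Cores needed to match the baseline throughput when each core is faster."""
    return math.ceil(baseline_cores / per_core_speedup)


def annual_license_cost(cores: int, cost_per_core: float) -> float:
    """Yearly license spend under a simple per-core licensing model."""
    return cores * cost_per_core


new_cores = cores_needed(BASELINE_CORES, PER_CORE_SPEEDUP)
savings = annual_license_cost(BASELINE_CORES, LICENSE_PER_CORE_PER_YEAR) - annual_license_cost(
    new_cores, LICENSE_PER_CORE_PER_YEAR
)
print(f"{new_cores} cores, ${savings:,.0f} saved per year")  # 683 cores, $341,000 saved per year
```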

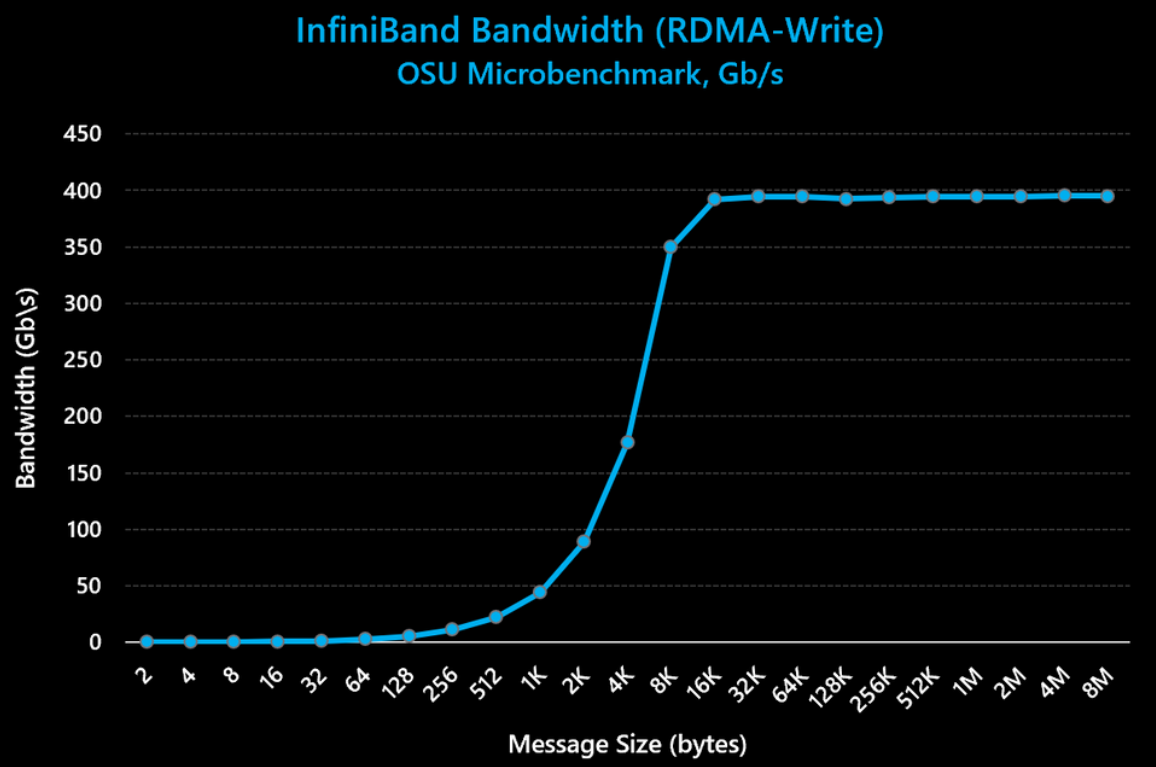

Microsoft detailed other technologies coming to the new VMs, including 400 Gb/sec Quantum-2 InfiniBand from Nvidia, 80 Gb/sec Azure Accelerated Networking, and 3.6 TB of local NVM-Express SSD storage delivering up to 12 GB/sec of read and 7 GB/sec of write bandwidth.
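Those link and storage figures convert easily into more intuitive terms. This sketch assumes decimal units (1 TB = 1,000 GB), 8 bits per byte, and sustained transfers at the quoted peak rates with no protocol or filesystem overhead, so real-world numbers will be lower; the helper names are illustrative.

```python
def gbit_to_gbyte_per_sec(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8.0


def seconds_to_move(terabytes: float, gbytes_per_sec: float) -> float:
    """Seconds to move `terabytes` (decimal TB) at a sustained GB/sec rate."""
    return terabytes * 1000.0 / gbytes_per_sec


LOCAL_SSD_TB = 3.6
print(gbit_to_gbyte_per_sec(400))                 # 50.0 GB/sec over InfiniBand
print(seconds_to_move(LOCAL_SSD_TB, 12.0))        # 300.0 seconds to read the full SSD
print(round(seconds_to_move(LOCAL_SSD_TB, 7.0)))  # 514 seconds to fill it
```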

The new HX and HBv4 series VMs will come first to the East US region, followed by the South Central US, West US 3, and West Europe regions.

For its part, AWS – which started introducing Epyc-based cloud instances in 2018 and has since launched more than 100 of them – will launch a new generation of Amazon EC2 M7a instances powered by the new AMD chips that it says will deliver a 50 percent performance boost over current M6a instances. The M7a instances are available in preview now and will be generally available in the third quarter.

The M7a instances support AVX-512 for high performance computing and the FP16 and BFloat16 half-precision formats for machine learning training and inference. They also use DDR5 memory, which brings 50 percent higher memory bandwidth than DDR4. Such capabilities will “enable customers to get additional performance and bring an even broader range of workloads to AWS,” Dave Brown, vice president of Amazon EC2 at AWS, said while on stage with Su.
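The two half-precision formats differ in how they split 16 bits: FP16 uses a 5-bit exponent and a 10-bit mantissa, while BFloat16 keeps FP32's 8-bit exponent and cuts the mantissa to 7 bits, trading precision for range. The Python sketch below emulates both roundings in software with the standard `struct` module (its `e` format is IEEE half precision); it illustrates the formats only and says nothing about how AVX-512 BF16 instructions on the M7a are actually used. The function names are illustrative.

```python
import struct


def float32_bits(x: float) -> int:
    """Raw IEEE 754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16(x: float) -> float:
    """Round x to bfloat16 (round-to-nearest-even on the low 16 bits of FP32)."""
    bits = float32_bits(x)
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)  # ties go to the even upper half
    bf16_bits = ((bits + rounding_bias) >> 16) & 0xFFFF
    # Widen back to FP32 by zero-filling the dropped mantissa bits.
    return struct.unpack("<f", struct.pack("<I", bf16_bits << 16))[0]


def to_float16(x: float) -> float:
    """Round x to IEEE half precision; struct raises OverflowError past FP16's range."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


third = 1.0 / 3.0
print(to_bfloat16(third), to_float16(third))  # 0.333984375 0.333251953125
print(to_bfloat16(70000.0))                   # 70144.0
try:
    to_float16(70000.0)
except OverflowError as err:
    print("FP16 overflow:", err)
```

BFloat16 holds 70,000 (coarsely, as 70,144) where FP16 overflows, while FP16 lands closer to 1/3; that range-over-precision trade is one reason BF16 is often favored for training.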

Brown also said that the cloud giant – which holds 32 percent of the cloud infrastructure services market, followed by Azure at 23 percent and Google Cloud at 10 percent – plans to expand the number of EC2 instances based on Genoa in the future.

Oracle said its upcoming E5 instances with the latest AMD chips will become generally available in the second half of the year, and Donald Lu, senior vice president of software development at OCI, said that organizations using AMD-based instances on its platform are collectively saving $40 million a year.

“With the next-generation of AMD processors powering our OCI Compute E5 instances, we’re offering our customers the ability to run any workload faster and more efficiently,” Lu said.

"chips" - Google News

June 16, 2023 at 08:55PM

The Big Clouds Get First Dibs On AMD “Genoa” Chips - The Next Platform