With some 80 AI chip vendors and startups in the marketplace, every company says it has a better idea when it comes to building AI chips for a wide range of industries and uses.

For SimpleMachines, a three-year-old fabless AI chip designer that's taking on chip giants like Nvidia and Marvell (as well as a wide-open field of fellow startups), it's all about what the company says is a different approach to solving AI computing challenges.

That begins with what the company calls an algorithm-adaptive AI chip that can be customized by programmers to quickly and seamlessly meet the needs of their workflows on the fly, said Karu Sankaralingam, SimpleMachines' founder and CEO.

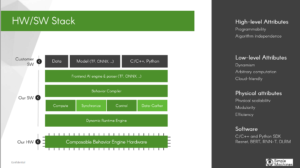

This idea led to Mozart, the company’s first AI chip, which is optimized for inference and features a 16-nm design, HBM2 high-bandwidth memory and a PCIe Gen3 x16 form factor. Mozart, Sankaralingam told EnterpriseAI, is sampling now and is being evaluated by customers. Built for SimpleMachines by chip maker TSMC, Mozart AI chips run with a complete software and hardware stack that allows data scientists to run their programs without worrying about what’s under the hood, he said.

What makes Mozart stand out in the world of AI chips is that it can run multiple AI models simultaneously on one chip, said Sankaralingam.

“That happens because our chip is internally broken up into 64 different modules, and if necessary, the programmer can address it and run 64 different applications or models at the same time,” he said. “And then a developer could write 64 different models and can be running off 64 modules, if they want, simultaneously on one piece of silicon.”
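As a rough illustration of what addressing 64 modules independently could look like from the programmer's side, here is a minimal Python sketch. It is not SimpleMachines' API: the `ModuleAllocator` class, its methods, and the model names are all hypothetical, and only the module count of 64 comes from the article.

```python
from dataclasses import dataclass, field

NUM_MODULES = 64  # module count quoted in the article


@dataclass
class ModuleAllocator:
    """Hypothetical bookkeeping of which model owns which on-chip module."""

    num_modules: int = NUM_MODULES
    owner: dict = field(default_factory=dict)  # module id -> model name

    def allocate(self, model: str, count: int) -> list:
        """Give `model` the `count` lowest-numbered free modules."""
        free = [m for m in range(self.num_modules) if m not in self.owner]
        if count > len(free):
            raise RuntimeError(f"only {len(free)} modules free, {count} requested")
        granted = free[:count]
        for m in granted:
            self.owner[m] = model
        return granted

    def release(self, model: str) -> None:
        """Free every module currently held by `model`."""
        self.owner = {m: o for m, o in self.owner.items() if o != model}


# Three models sharing one chip, each on its own disjoint set of modules.
alloc = ModuleAllocator()
recsys = alloc.allocate("recommendation", 32)
speech = alloc.allocate("speech", 16)
vision = alloc.allocate("image-detection", 16)
```

In this sketch the three workloads together occupy all 64 modules, so a further request fails until one of them is released. At the other extreme, 64 single-module allocations would correspond to the 64-models-at-once case Sankaralingam describes.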

Mozart’s software stack includes direct TensorFlow support as well as APIs for C/C++ and Python, making it familiar to use for programmers and data scientists.

Mozart is available for purchase in its PCIe card form, called Accelerando, or it can be used via SimpleMachines’ Symphony Cloud Service, which has access to public clouds like Azure, Google Cloud Platform, and AWS in what’s essentially an AI-as-a-Service delivery model. Initial tests show use cases for recommendation engines, speech and language processing, and image detection, which can run simultaneously. The Accelerando cards with Mozart chips will be sold in systems from standard OEMs like Dell and Supermicro in the future.

A Different Approach

Karl Freund, a senior research analyst for HPC and machine learning with Moor Insights & Strategy, said he’s seen more than a dozen unique AI chip designs in what he calls the “Cambrian Explosion” in AI chips and software in recent years, but that the Mozart design from SimpleMachines is different from most.

“Like all AI startups, they claim awesome performance, but it is too early to verify their assertions,” said Freund. “I was impressed with their memory architecture, which seems elegant and quite simple, which should make the heavy lifting of software a bit easier to tackle. On the software front, they still have a lot of work to do, such as support for PyTorch. But they seem to be heading in the right direction and merit further investigation as their platform matures.”

Constant Changes in AI Chip Requirements

One of the inspirations for the new Mozart AI chip was Sankaralingam’s ongoing research that pointed to the need for a new class of architecture that's ready for the big data era of today, he said. Sankaralingam is also a computer science professor at the University of Wisconsin-Madison, where he has taught for 14 years.

Current chip platforms, even including custom chips built for specific requirements, just aren’t meeting those needs, said Sankaralingam. “Applications are exploding in diversity, so it's not just processing images anymore. It's text, speech, time-series data, and it's changing fast and growing, then breaking through every six months. The opportunity is they need a lot of compute efficiency, and they're underserved by GPUs. These trends send us to this point that purpose-built, custom chips for one AI problem are obsolete on arrival.”

With Mozart, those challenges are resolved because project needs can be changed on the chips by reprogramming using the chip’s deep software stack on the fly, he said.

“In our discussions with customers, they've all said how different companies are using different models, and many companies are using many models,” he said. “It leads us to look again at our hypothesis on what is necessary for AI compute. Horsepower and efficiency are not the only metrics -- the other important one is being algorithm-adaptable. The reason GPUs are where they are is because they're very adaptable.”

Using a different approach, SimpleMachines’ strategy is to ignore what the applications are trying to do and look to the algorithms, said Sankaralingam. “We observed that many or all of these algorithms fundamentally break up into four behaviors – data-gather, computation, control and synchronization. We can use those behaviors to categorize the algorithm. We then build a compiler that can decompose an application into those behaviors and feed that information to our hardware.”

Then the hardware, instead of having instruction fetch, branch predictors, and caches, implements those four behaviors, he said.

“That's all we need to program them where they are, which as a programmer you can write in TensorFlow, we extract out those behaviors and map them onto our silicon,” said Sankaralingam. “That's a big paradigm shift.”

Mozart’s architecture abstracts any software application into a small number of defined behaviors and then uses SimpleMachines’ own compiler to integrate into the backend of standard AI frameworks like TensorFlow. That allows the architecture to translate those programs and reconfigure the hardware on the fly, resulting in a chip that behaves as if it were originally designed for that application, according to the company.
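The decomposition into data-gather, computation, control and synchronization can be pictured with a toy classifier. The sketch below is illustrative only and does not reflect SimpleMachines' actual compiler: the op names, the `OP_TO_BEHAVIOR` table, and the `decompose` function are hypothetical, and only the four behavior categories come from the article.

```python
from collections import defaultdict
from enum import Enum


class Behavior(Enum):
    """The four behaviors named by Sankaralingam."""

    DATA_GATHER = "data-gather"
    COMPUTATION = "computation"
    CONTROL = "control"
    SYNCHRONIZATION = "synchronization"


# Hypothetical mapping from toy op names to behaviors (an assumption for
# illustration, not taken from any real framework or from SimpleMachines).
OP_TO_BEHAVIOR = {
    "embedding_lookup": Behavior.DATA_GATHER,
    "load_tile": Behavior.DATA_GATHER,
    "matmul": Behavior.COMPUTATION,
    "relu": Behavior.COMPUTATION,
    "loop": Behavior.CONTROL,
    "branch": Behavior.CONTROL,
    "barrier": Behavior.SYNCHRONIZATION,
    "all_reduce": Behavior.SYNCHRONIZATION,
}


def decompose(ops):
    """Group a flat list of op names by the behavior each one exhibits."""
    groups = defaultdict(list)
    for op in ops:
        groups[OP_TO_BEHAVIOR[op]].append(op)
    return dict(groups)


# A toy "program": the resulting profile is what a compiler of this kind
# might hand to the hardware instead of a conventional instruction stream.
program = ["embedding_lookup", "matmul", "relu", "loop", "barrier"]
profile = decompose(program)
```

A real compiler would work on a framework's computation graph rather than a flat list of names, but the idea is the same: the application's identity is discarded and only its mix of behaviors is kept.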

SimpleMachines sees opportunities for its Mozart AI chips in public data center, network security, finance, and insurance markets, with additional opportunities in the future in edge and mobile device markets for upcoming products on its roadmap.

Busy AI Chip Marketplace

The custom AI chip market is a very busy place. In September, Arm chip maker Marvell Technology Group announced that it is moving its newest chip platform, the ThunderX3 line, to a custom-only chip business that can serve any customer and product need. Marvell said it sees custom chips as the wave of the future as customers clamor for custom-built processors to power a wide range of routers, switches, 5G hardware, IoT devices, appliances, and other hardware.

November 26, 2020 at 05:26AM

https://ift.tt/2UZpyAm

AI Chip Startup SimpleMachines Wants You To Love Mozart - EnterpriseAI